Author Affiliations

Beijing National Research Center for Information Science and Technology, Department of Electronic Engineering, Tsinghua University, Beijing 100084, China

Abstract

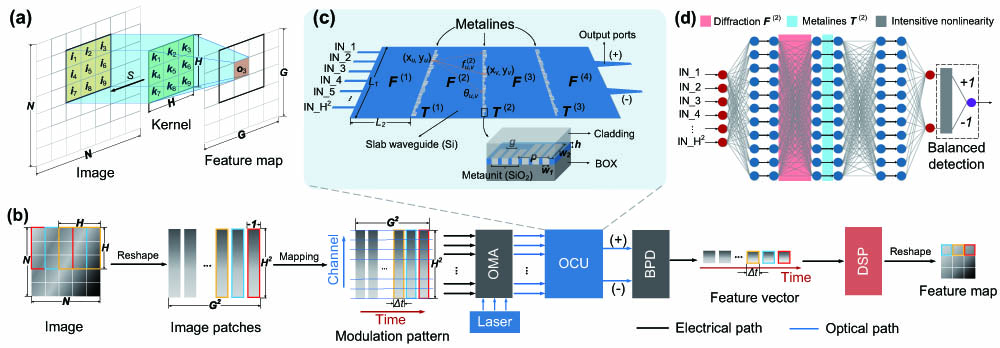

Ever-growing deep-learning technologies are making revolutionary changes to modern life. However, conventional computing architectures are designed to process sequential, digital programs and are burdened when performing massively parallel and adaptive deep-learning applications. Photonic integrated circuits provide an efficient approach to mitigating the bandwidth limitations and the power wall of their electronic counterparts, showing great potential for ultrafast and energy-free high-performance computation. Here, we propose an optical computing architecture enabled by on-chip diffraction to implement convolutional acceleration, termed the “optical convolution unit” (OCU). We demonstrate that any real-valued convolution kernel can be implemented by the OCU, with a prominent boost in computational throughput, via the concept of structural reparameterization. With the OCU as the fundamental unit, we build an optical convolutional neural network (oCNN) to implement two popular deep-learning tasks: classification and regression. For classification, the Fashion Modified National Institute of Standards and Technology (Fashion-MNIST) and Canadian Institute for Advanced Research (CIFAR-4) data sets are tested, with accuracies of 91.63% and 86.25%, respectively. For regression, we build an optical denoising convolutional neural network to handle Gaussian noise in gray-scale images with noise levels , 15, and 20, yielding clean images with average peak signal-to-noise ratios (PSNRs) of 31.70, 29.39, and 27.72 dB, respectively. The proposed OCU offers low energy consumption and high information density owing to its fully passive nature and compact footprint, providing a parallel yet lightweight solution for future compute-in-memory architectures that handle high-dimensional tensors in deep learning.

Photonics Research

2023, 11(6): 1125
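The OCU abstract relies on structural reparameterization without explaining it. As a generic illustration of the idea (in the RepVGG-style form, which is an assumption here and not necessarily the OCU's exact scheme), the sketch below shows that two parallel linear convolution branches, a 3×3 kernel and a 1×1 kernel, can be folded into a single 3×3 kernel because convolution is linear. The helper names `conv2d`, `two_branch`, and `reparameterize` are hypothetical.

```python
def conv2d(img, ker):
    """'Valid' 2D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(ker), len(ker[0])
    h, w = len(img), len(img[0])
    return [[sum(img[i + a][j + b] * ker[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(w - kw + 1)]
            for i in range(h - kh + 1)]


def two_branch(img, k3, k1):
    """Training-time structure: a 3x3 branch plus a 1x1 branch aligned to its center."""
    main = conv2d(img, k3)
    return [[main[i][j] + k1 * img[i + 1][j + 1] for j in range(len(main[0]))]
            for i in range(len(main))]


def reparameterize(k3, k1):
    """Inference-time structure: fold the 1x1 weight into the 3x3 kernel's center."""
    merged = [row[:] for row in k3]  # copy so the original kernel is untouched
    merged[1][1] += k1
    return merged


img = [[1, 2, 0, 3],
       [4, 1, 2, 1],
       [0, 3, 1, 2],
       [2, 1, 4, 0]]
k3 = [[1, 0, -1],
      [2, 0, -2],
      [1, 0, -1]]
k1 = 5
print(two_branch(img, k3, k1) == conv2d(img, reparameterize(k3, k1)))  # True
```

After merging, inference evaluates one kernel instead of two branches; the same folding extends to any number of parallel linear branches.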

Author Affiliations

1 Key Laboratory of Photoelectronic Imaging Technology and System of Ministry of Education of China, School of Optics and Photonics, Beijing Institute of Technology, Beijing 100081, China

2 Beijing National Research Center for Information Science and Technology (BNRist), Department of Electronic Engineering, Tsinghua University, Beijing 100084, China

Abstract

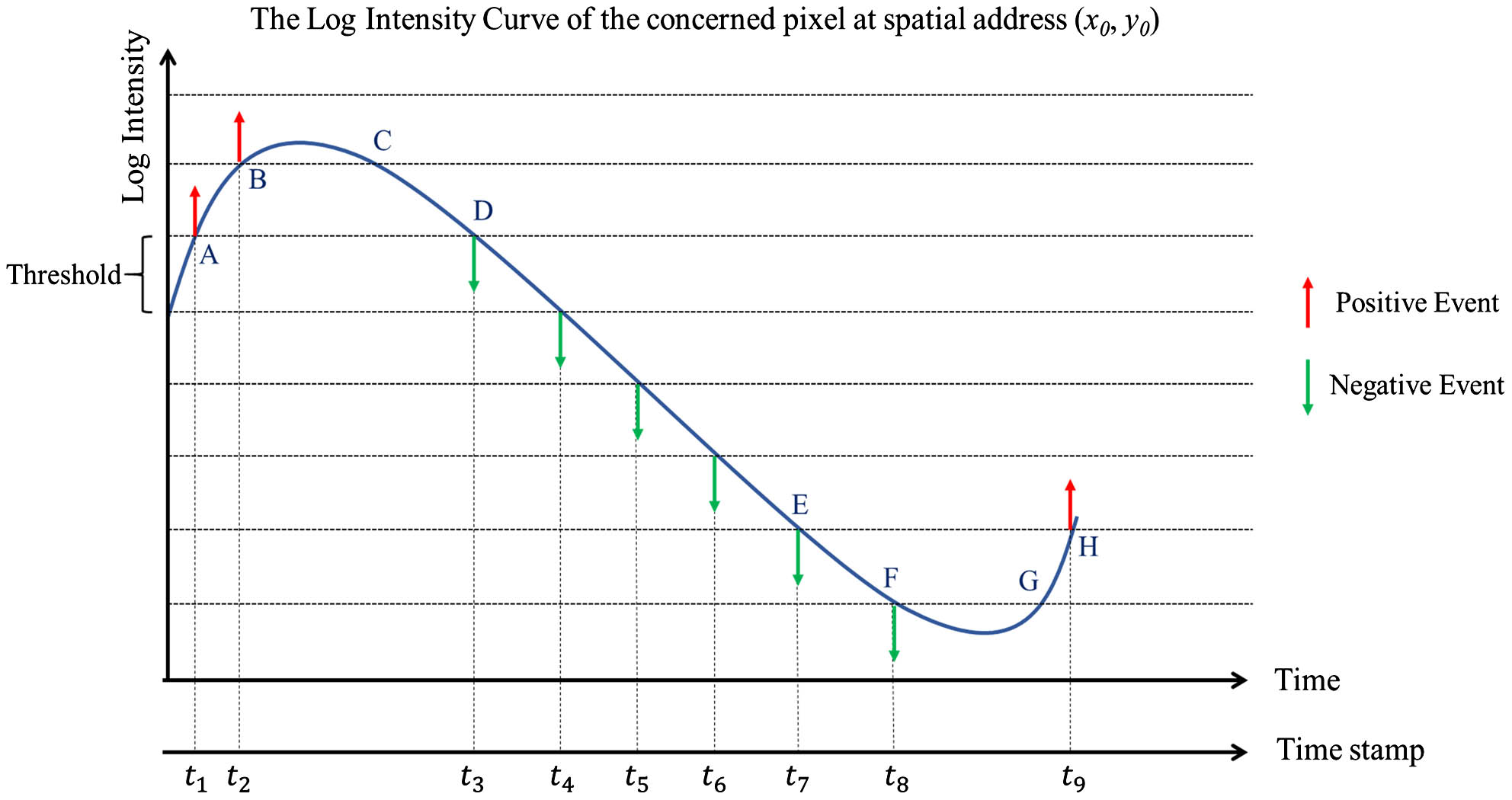

Non-line-of-sight (NLOS) imaging is an emerging technique for detecting objects behind obstacles or around corners. Recent studies on passive NLOS imaging mainly focus on steady-state measurement and reconstruction methods, which are limited in recognizing moving targets. We propose what is, to the best of our knowledge, the first event-based passive NLOS imaging method. We acquire asynchronous event-based data of the diffusion spot on the relay surface; these data contain detailed dynamic information about the NLOS target and effectively ease the degradation caused by target movement. In addition, we derive an event-NLOS forward model to demonstrate the event-based cues. Furthermore, we present EM-NLOS, the first event-based NLOS imaging data set, and extract movement features with a time-surface representation. We compare reconstructions from event-based data with those from frame-based data: the event-based method outperforms the frame-based method by 20% in peak signal-to-noise ratio (PSNR) and by 10% in learned perceptual image patch similarity (LPIPS).

Keywords: non-line-of-sight imaging; event camera; event-based representation

Chinese Optics Letters

2023, 21(6): 061103
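The NLOS abstract extracts movement features with a time-surface representation but does not define it. A common form, assumed here (the paper's exact variant, decay kernel, and polarity handling may differ), assigns each pixel exp(-(t_ref - t_last)/τ), where t_last is that pixel's most recent event timestamp up to the reference time t_ref and τ is a decay constant. The function name `time_surface` and the (t, x, y, polarity) event layout are hypothetical.

```python
import math


def time_surface(events, width, height, t_ref, tau):
    """Exponentially decayed time surface evaluated at time t_ref.

    events: iterable of (t, x, y, polarity) tuples in any order.
    Polarity is ignored in this sketch; pixels with no event get 0.0.
    """
    last = [[None] * width for _ in range(height)]
    for t, x, y, _polarity in events:
        if t <= t_ref:
            # keep the most recent timestamp seen at this pixel
            prev = last[y][x]
            last[y][x] = t if prev is None else max(prev, t)
    return [[0.0 if ts is None else math.exp(-(t_ref - ts) / tau) for ts in row]
            for row in last]


events = [(0.0, 0, 0, 1), (1.0, 1, 0, -1), (2.0, 1, 0, 1)]
surface = time_surface(events, width=2, height=1, t_ref=2.0, tau=1.0)
print(surface)  # pixel (0, 0) decays to exp(-2); pixel (1, 0) fired at t_ref, so 1.0
```

Recently active pixels stay close to 1 while stale ones fade, so the surface encodes where and how recently the diffusion spot changed.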

Author Affiliations

1 Beijing National Research Center for Information Science and Technology (BNRist), Beijing 100084, China

2 Department of Electronic Engineering, Tsinghua University, Beijing 100084, China

Abstract

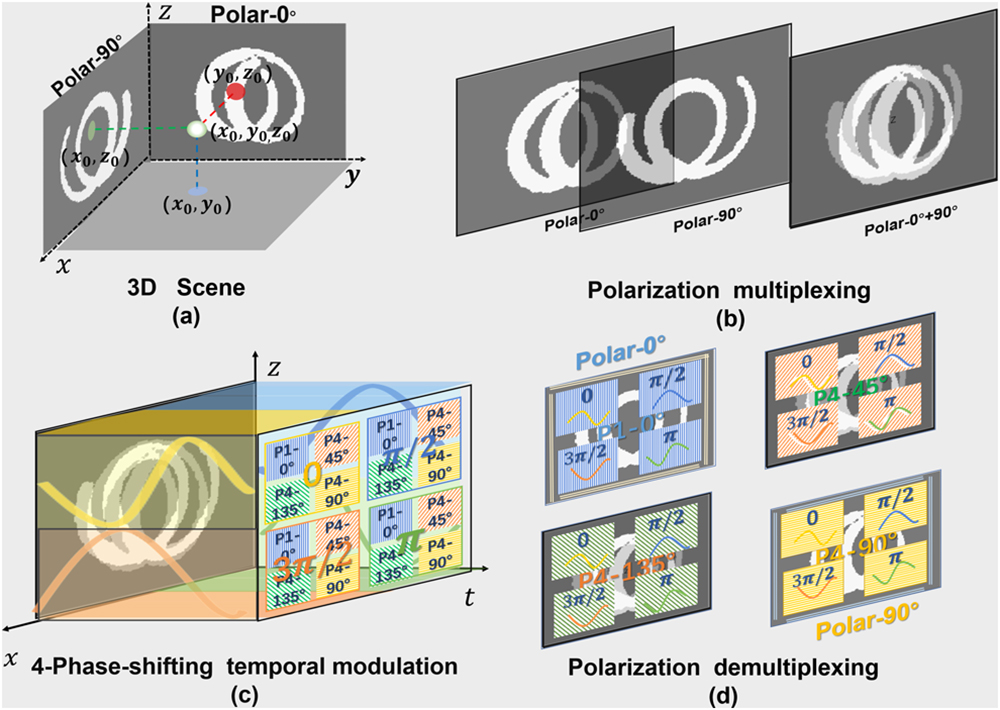

3D mapping and tracking of moving objects have found important applications in 3D reconstruction for visual odometry and simultaneous localization and mapping (SLAM). This paper presents a novel camera architecture that locates fast-moving objects in four-dimensional (4D) space (x, y, z, t) from a single-shot image. Our 3D tracking system records two orthogonal fields of view (FoVs) with different polarization states on one polarization sensor. An optical spatial modulator is used to build temporal Fourier-phase coding channels, whose signals are integrated in the corresponding CMOS pixels during the exposure time. With 8-bit grayscale modulation, each coding channel can achieve a 256-fold improvement in temporal resolution. A fast single-shot 3D tracking system with 0.78 ms temporal resolution within a 200 ms exposure is experimentally demonstrated. Furthermore, the system provides a new image format, the Fourier-phase map, which has a compact data volume. The latent spatio-temporal information in one 2D image can be efficiently reconstructed at relatively low computational cost through a straightforward phase-matching algorithm. Combined with scene-driven exposure and reasonable Fourier-phase prediction, the system can acquire 4D data (x, y, z, t) of moving objects, segment 3D motion based on temporal cues, and track targets in complicated environments.

Photonics Research

2021, 9(10): 10001924
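The quoted numbers in the 4D tracking abstract are mutually consistent: 200 ms / 256 = 0.78125 ms, matching the 0.78 ms temporal resolution. The sketch below illustrates how a temporal phase code can timestamp a brief event inside one exposure. It is a generic four-step phase-shifting scheme chosen for illustration, not the paper's coding or phase-matching algorithm: it assumes an impulse-like event at time t0, four channels modulated by 0.5 + 0.5·cos(2πt/T - kπ/2) and quantized to 8 bits, and phase recovery with `atan2`. All names are hypothetical.

```python
import math

EXPOSURE_MS = 200.0  # exposure time T quoted in the abstract
LEVELS = 256         # 8-bit grayscale modulation


def quantize(x):
    """Round a modulation value in [0, 1] to the nearest of 256 gray levels."""
    return round(x * (LEVELS - 1)) / (LEVELS - 1)


def encode(t0_ms):
    """Values recorded by four phase-shifted channels for an impulse at t0.

    For an impulse, each pixel integrates only the (quantized) modulation
    value at t0, so the recorded value equals that gray level.
    """
    theta = 2 * math.pi * t0_ms / EXPOSURE_MS
    return [quantize(0.5 + 0.5 * math.cos(theta - k * math.pi / 2))
            for k in range(4)]


def decode(values):
    """Four-step phase recovery: (I0 - I2, I1 - I3) = (cos theta, sin theta)."""
    i0, i1, i2, i3 = values
    theta = math.atan2(i1 - i3, i0 - i2) % (2 * math.pi)
    return theta * EXPOSURE_MS / (2 * math.pi)


print(EXPOSURE_MS / LEVELS)   # 0.78125 (ms per gray level)
print(decode(encode(123.4)))  # close to 123.4
```

Because the phase is continuous, the 8-bit quantization error here stays well below one 0.78 ms bin; events very close to the start or end of the exposure can wrap around, which a real decoder would need to handle.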

Author Affiliations

1 Department of Electronic Engineering, Tsinghua University, Beijing 100084, China

2 Beijing National Research Center for Information Science and Technology (BNRist), Beijing 100084, China

Abstract

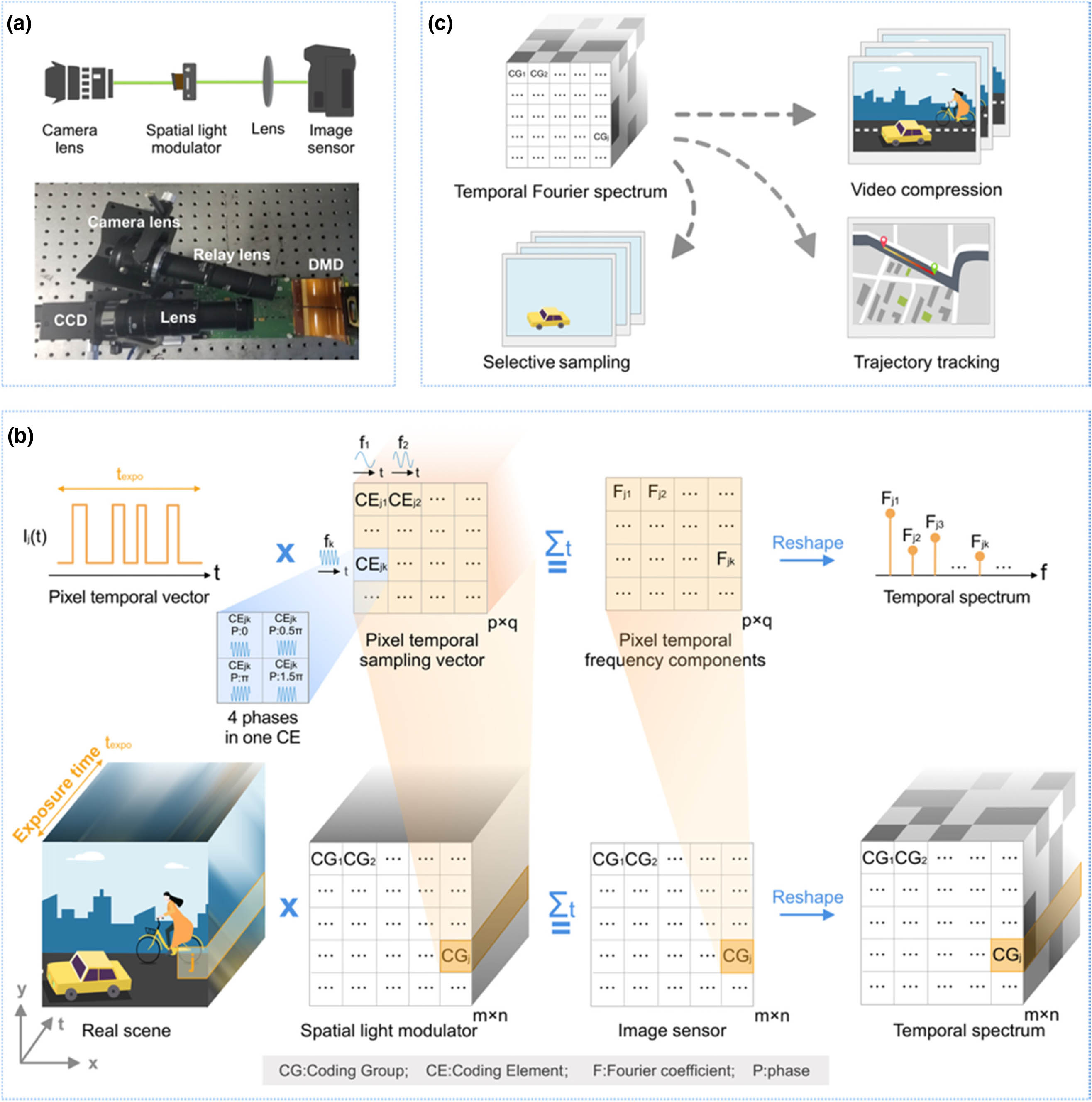

Novel camera architectures facilitate the development of machine vision. Instead of capturing frame sequences in the temporal domain as traditional video cameras do, FourierCam directly measures the pixel-wise temporal spectrum of the video in a single shot through optical coding. Compared with the classic pipeline of video capture followed by time-frequency transformation, this programmable frequency-domain sampling strategy offers an attractive combination of low detection bandwidth, low computational burden, and low data volume. Using various temporal filter kernels designed with FourierCam, we demonstrate a series of machine vision functions, such as video compression, background subtraction, object extraction, and trajectory tracking.

Photonics Research

2021, 9(5): 05000701
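To illustrate how coded integration can yield a pixel's temporal spectrum, the sketch below assumes (this is not taken from the paper) that a pixel's signal is sampled at N instants within one exposure and integrated under four nonnegative, phase-shifted cosine codes 0.5 + 0.5·cos(2πkn/N - pπ/2), p = 0…3. Differencing the four integrated values yields the k-th DFT coefficient. A static background contributes only to the k = 0 term, so any k ≥ 1 coefficient rejects it, which is one way background subtraction can fall out of frequency-domain sampling. Function names are hypothetical.

```python
import cmath
import math


def coded_integration(signal, k, phase):
    """Integrate a pixel's samples under a nonnegative cosine code."""
    n_total = len(signal)
    return sum(s * (0.5 + 0.5 * math.cos(2 * math.pi * k * n / n_total - phase))
               for n, s in enumerate(signal))


def fourier_coefficient(signal, k):
    """k-th DFT coefficient X_k = sum_n s_n exp(-j 2 pi k n / N) from four codes."""
    i0, i1, i2, i3 = (coded_integration(signal, k, p * math.pi / 2) for p in range(4))
    # i0 - i2 = sum s_n cos(theta_n) and i1 - i3 = sum s_n sin(theta_n)
    return complex(i0 - i2, -(i1 - i3))


def dft(signal, k):
    """Direct DFT coefficient, used only as a reference."""
    n_total = len(signal)
    return sum(s * cmath.exp(-2j * math.pi * k * n / n_total)
               for n, s in enumerate(signal))


pixel = [3.0, 3.0, 7.0, 3.0, 3.0, 3.0, 3.0, 3.0]  # static level plus a brief flash
background = [3.0] * 8
print(abs(fourier_coefficient(pixel, 1) - dft(pixel, 1)) < 1e-9)  # True
print(abs(fourier_coefficient(background, 1)) < 1e-9)             # True
```

For the example pixel, the k = 1 coefficient has magnitude 4, contributed entirely by the flash above the static level of 3.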